
Why do reasoning models 'wait'?

Prior work has shown that a significant driver of performance in reasoning models is their ability to reason and self-correct. A distinctive marker in these reasoning traces is the token 'wait', which often signals reasoning behaviors such as backtracking.

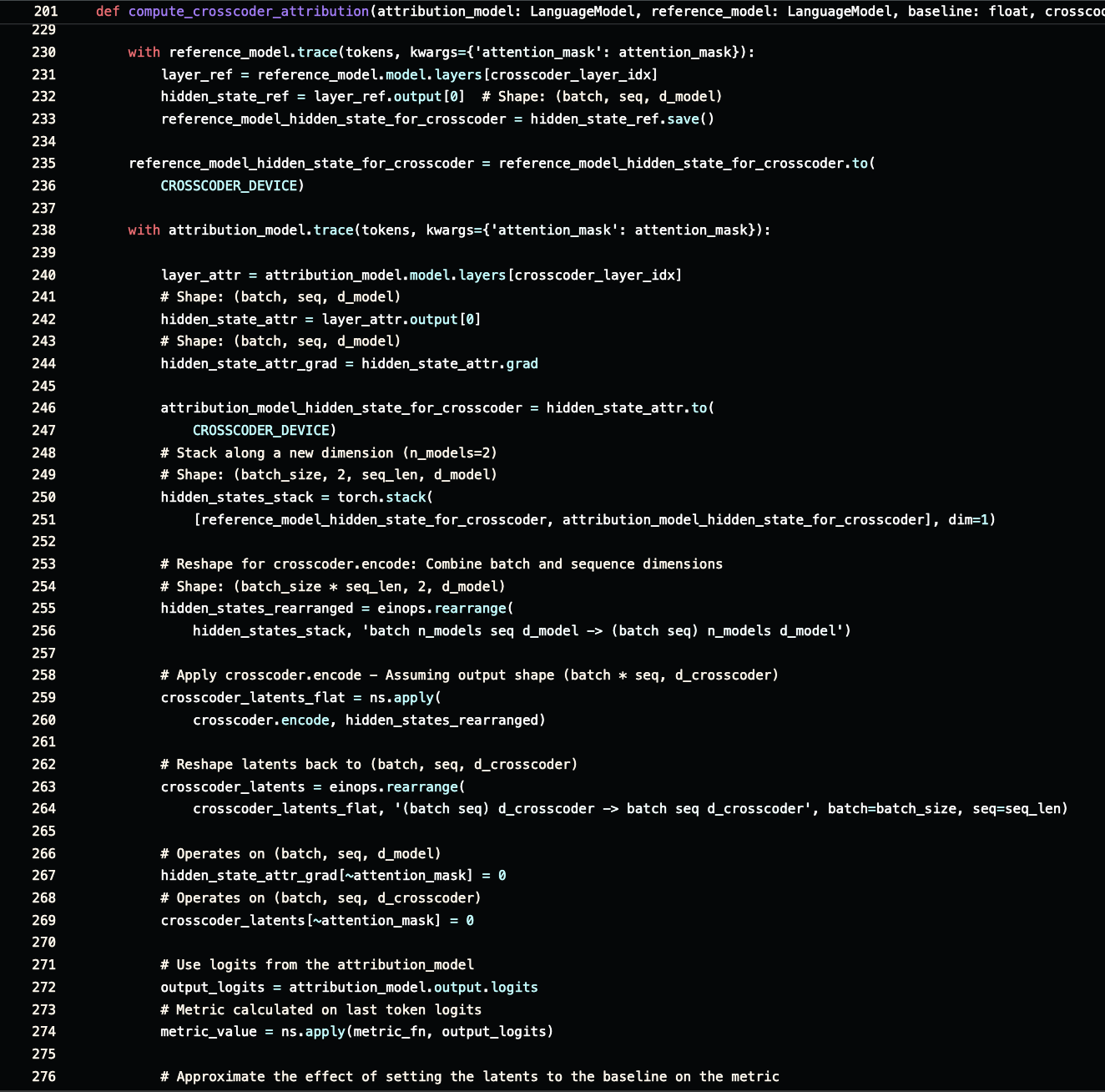

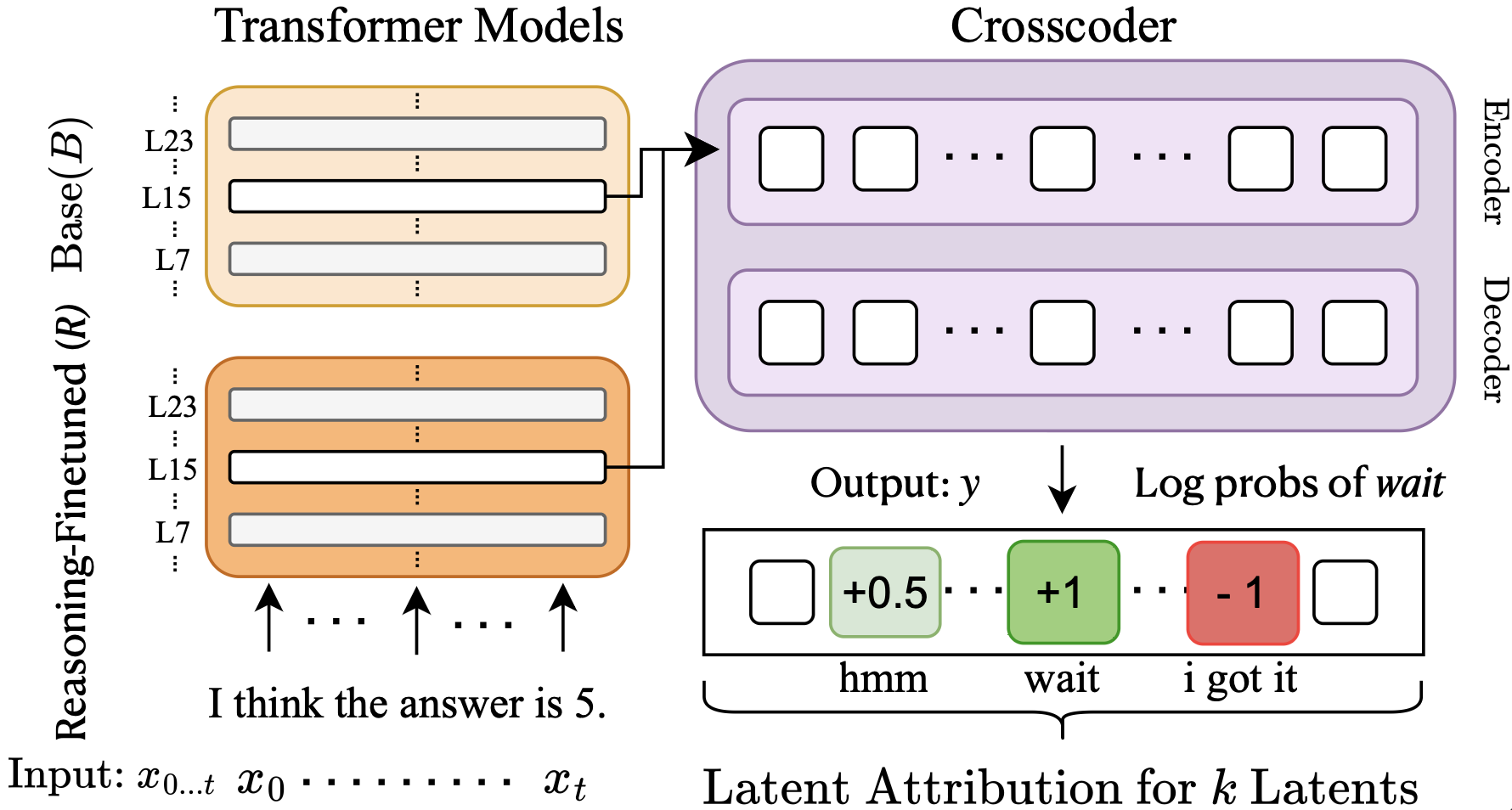

In this work, we explore whether the model's latent states preceding 'wait' tokens contain information that modulates the subsequent reasoning process. We train sparse crosscoders at multiple layers of DeepSeek-R1-Distill-Llama-8B and its base model, and introduce a latent attribution technique for the crosscoder setting. With it, we locate a small set of features that promote or suppress the probability of the 'wait' token. Finally, through causal interventions, we show that many of these features are relevant to the reasoning process and give rise to distinct reasoning patterns such as restarting from the beginning, recalling knowledge, and expressing uncertainty.
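A sparse crosscoder can be sketched in a few lines. The version below is a minimal NumPy illustration, assuming the ReLU encoder and decoder-norm-weighted L1 penalty from Anthropic's crosscoder write-up; the authors' exact architecture, sparsity penalty, and hyperparameters may differ, and all names (`init_params`, `crosscoder_loss`, `l1_coeff`) are hypothetical. A single latent code is computed from both models' activations at the same token, and each model gets its own decoder.

```python
import numpy as np

def init_params(d_model, n_latents, seed=0):
    """Random initialization for a two-model crosscoder (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    s = 1.0 / np.sqrt(d_model)
    return {
        "W_enc_base": rng.normal(0, s, (d_model, n_latents)),
        "W_enc_reason": rng.normal(0, s, (d_model, n_latents)),
        "b_enc": np.zeros(n_latents),
        "W_dec_base": rng.normal(0, s, (n_latents, d_model)),
        "W_dec_reason": rng.normal(0, s, (n_latents, d_model)),
        "b_dec_base": np.zeros(d_model),
        "b_dec_reason": np.zeros(d_model),
    }

def crosscoder_loss(p, x_base, x_reason, l1_coeff=1e-3):
    """x_base, x_reason: (batch, d_model) activations of both models on the same tokens."""
    # One shared latent code, encoded from BOTH models' activations.
    f = np.maximum(0.0, x_base @ p["W_enc_base"] + x_reason @ p["W_enc_reason"] + p["b_enc"])
    # Separate reconstructions, one per model.
    rec_base = f @ p["W_dec_base"] + p["b_dec_base"]
    rec_reason = f @ p["W_dec_reason"] + p["b_dec_reason"]
    mse = ((x_base - rec_base) ** 2).sum(-1) + ((x_reason - rec_reason) ** 2).sum(-1)
    # Sparsity penalty: each latent's activation weighted by its summed decoder norms.
    dec_norms = np.linalg.norm(p["W_dec_base"], axis=1) + np.linalg.norm(p["W_dec_reason"], axis=1)
    sparsity = (f * dec_norms).sum(-1)
    return f, float((mse + l1_coeff * sparsity).mean())
```

In practice this objective would be minimized with an autodiff framework over activations cached from both models at the chosen layer; the sketch only shows the forward pass and the loss.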

How to find reasoning features?

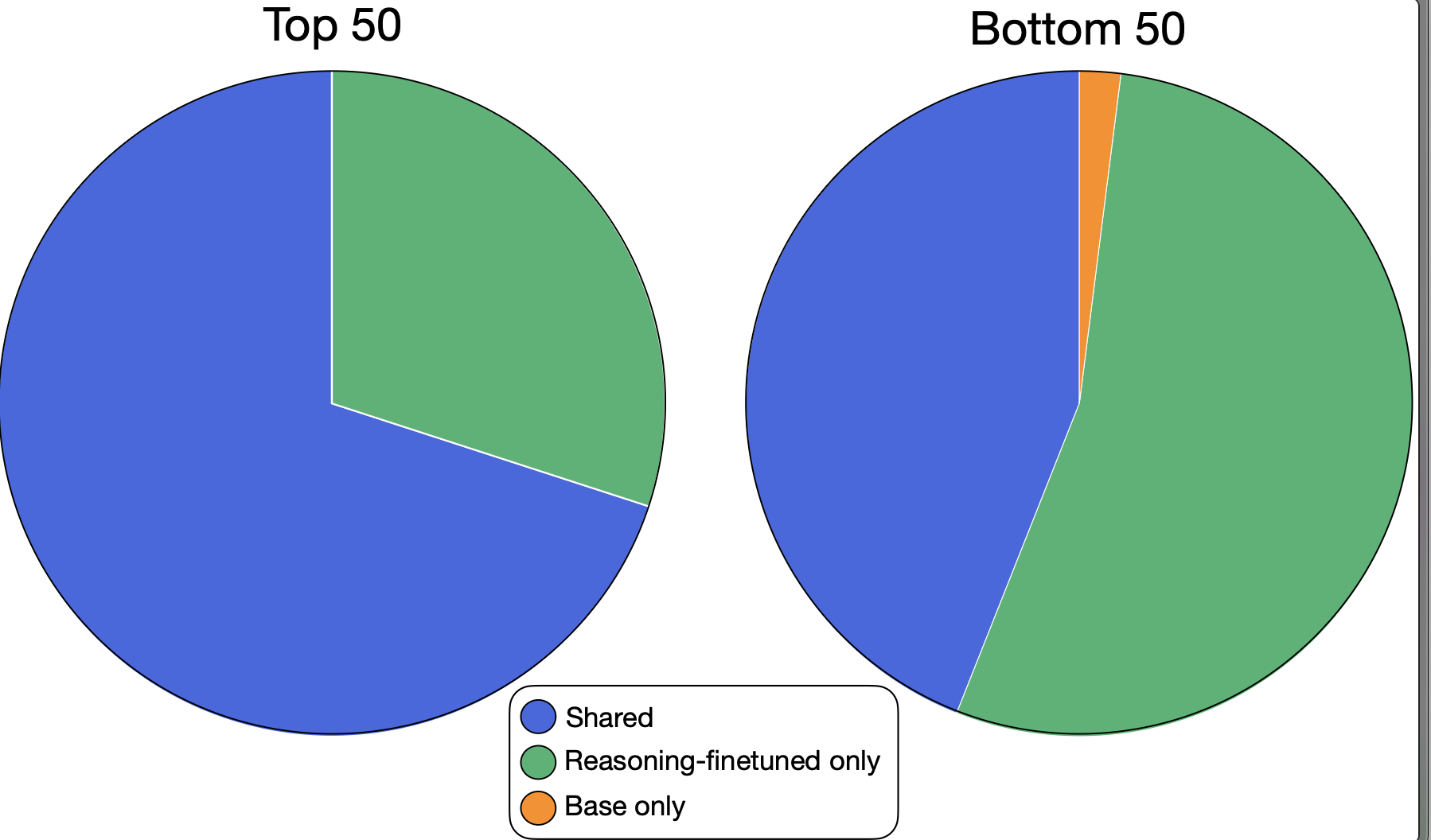

To discover features, we first train sparse crosscoders, a technique that jointly decomposes the activations of two models (here, a base model and its reasoning-finetuned counterpart) into a shared dictionary of features. This lets us classify the learned features into three categories: base-only, shared, and reasoning-finetuned. We then apply latent attribution patching to select the features that most strongly modulate the 'wait' token. This yields a 'top 50' list (features that promote 'wait') and a 'bottom 50' list (features that suppress 'wait').
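The attribution step can be illustrated with a first-order (attribution patching) estimate: zero-ablating latent i removes a_i d_i from the reasoning model's reconstruction, so its effect on a scalar metric m, such as the 'wait' logit, is approximately a_i (d_i · ∇m). This is a sketch under that assumption, not the authors' implementation; the function names are hypothetical, and in a real run `grad` would come from backpropagating the 'wait' logit to the residual stream at the crosscoder's layer.

```python
import numpy as np

def latent_attribution(acts, dec_reason, grad):
    """First-order estimate of each latent's contribution to a scalar metric.

    acts:       (n_latents,) crosscoder latent activations at one token position
    dec_reason: (n_latents, d_model) decoder directions into the reasoning model
    grad:       (d_model,) gradient of the metric (e.g. the 'wait' logit)
                with respect to the residual stream at that layer
    Positive scores mean the latent pushes the metric up.
    """
    return acts * (dec_reason @ grad)

def top_bottom(scores, k=50):
    """Indices of the k most promoting and the k most suppressing latents."""
    order = np.argsort(scores)
    return order[::-1][:k], order[:k]
```

Scores would typically be averaged over many token positions preceding 'wait' before ranking. For a metric that is linear in the residual stream, the estimate equals the exact effect of ablating the latent.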

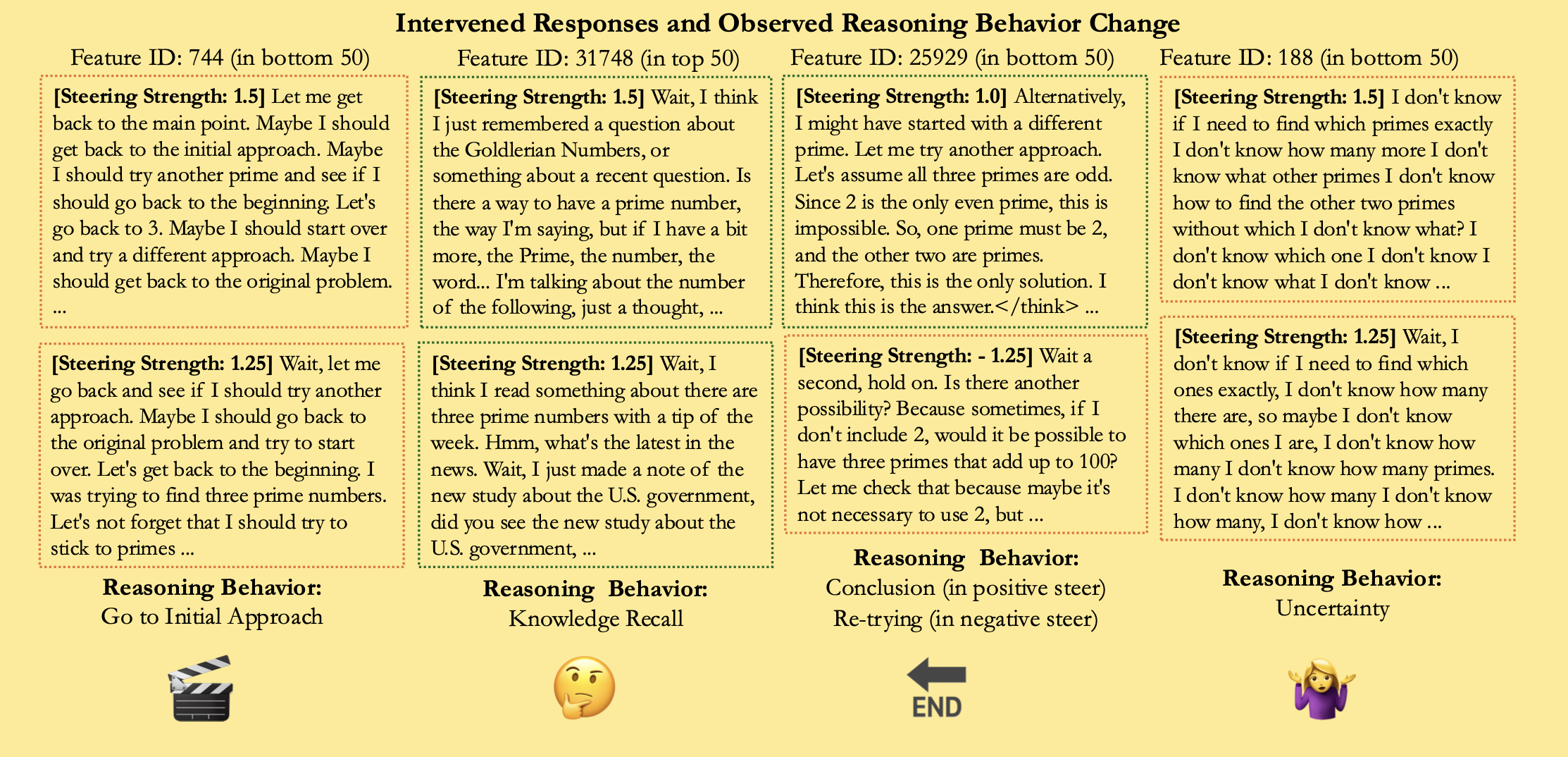

What do they do?

Through activation steering and analysis of max-activating examples, we show that many of our identified features are relevant to the reasoning process and give rise to different types of reasoning patterns. Internal states before 'wait' encode different reasoning strategies such as restarting from the beginning, recalling prior knowledge, expressing uncertainty, and double-checking.

Interestingly, the bottom features (suppressing 'wait') lead to novel reasoning patterns not previously documented. For the top features (promoting 'wait'), positive steering often produces degenerate sequences such as "WaitWaitWait...", while negative steering removes 'wait' entirely. We also found features that, when steered, lead the model either to wrap up and give a final response or to extend the reasoning process.

Where do they come from?

Using the crosscoder's classification of features into base-only, shared, and reasoning-finetuned, we found, surprisingly, that many reasoning-related features fall into the bottom bucket (suppressing 'wait'). This suggests that both amplifying and suppressing 'wait' are of key importance to reasoning.

As expected, none of the top features are base-only. Most interestingly, the bottom features contain more reasoning-finetuned-only features than the top features. This suggests that reasoning finetuning allocates a substantial number of features to suppressing, as well as promoting, 'wait' token probabilities.

The bottom features also contain some base-only features. We hypothesize that some features used only by the base model decrease the likelihood of the 'wait' token in reasoning sequences, since the base model was not trained to predict 'wait' in such contexts.
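One common way to assign crosscoder latents to these buckets is by the relative norm of their two decoder directions: a latent that writes only into the base model has a near-zero reasoning-model decoder, and vice versa. The sketch below assumes that criterion with illustrative thresholds of 0.1 and 0.9; the authors' exact rule and cut-offs may differ, and the function names are hypothetical.

```python
from collections import Counter

import numpy as np

def relative_decoder_norm(W_dec_base, W_dec_reason):
    """Share of each latent's decoder norm that goes to the reasoning model (0..1)."""
    nb = np.linalg.norm(W_dec_base, axis=1)
    nr = np.linalg.norm(W_dec_reason, axis=1)
    return nr / (nb + nr + 1e-12)  # epsilon avoids 0/0 for dead latents

def classify_latents(W_dec_base, W_dec_reason, low=0.1, high=0.9):
    """Label each latent as base-only, shared, or reasoning-finetuned."""
    r = relative_decoder_norm(W_dec_base, W_dec_reason)
    labels = np.full(r.shape, "shared", dtype=object)
    labels[r <= low] = "base-only"
    labels[r >= high] = "reasoning-finetuned"
    return labels

def origin_breakdown(labels, idx):
    """Count bucket membership for a selected set of latents (e.g. the top 50)."""
    return Counter(labels[idx])
```

Applying `origin_breakdown` to the top-50 and bottom-50 index lists gives the kind of per-bucket counts discussed above.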

What information do they contain?

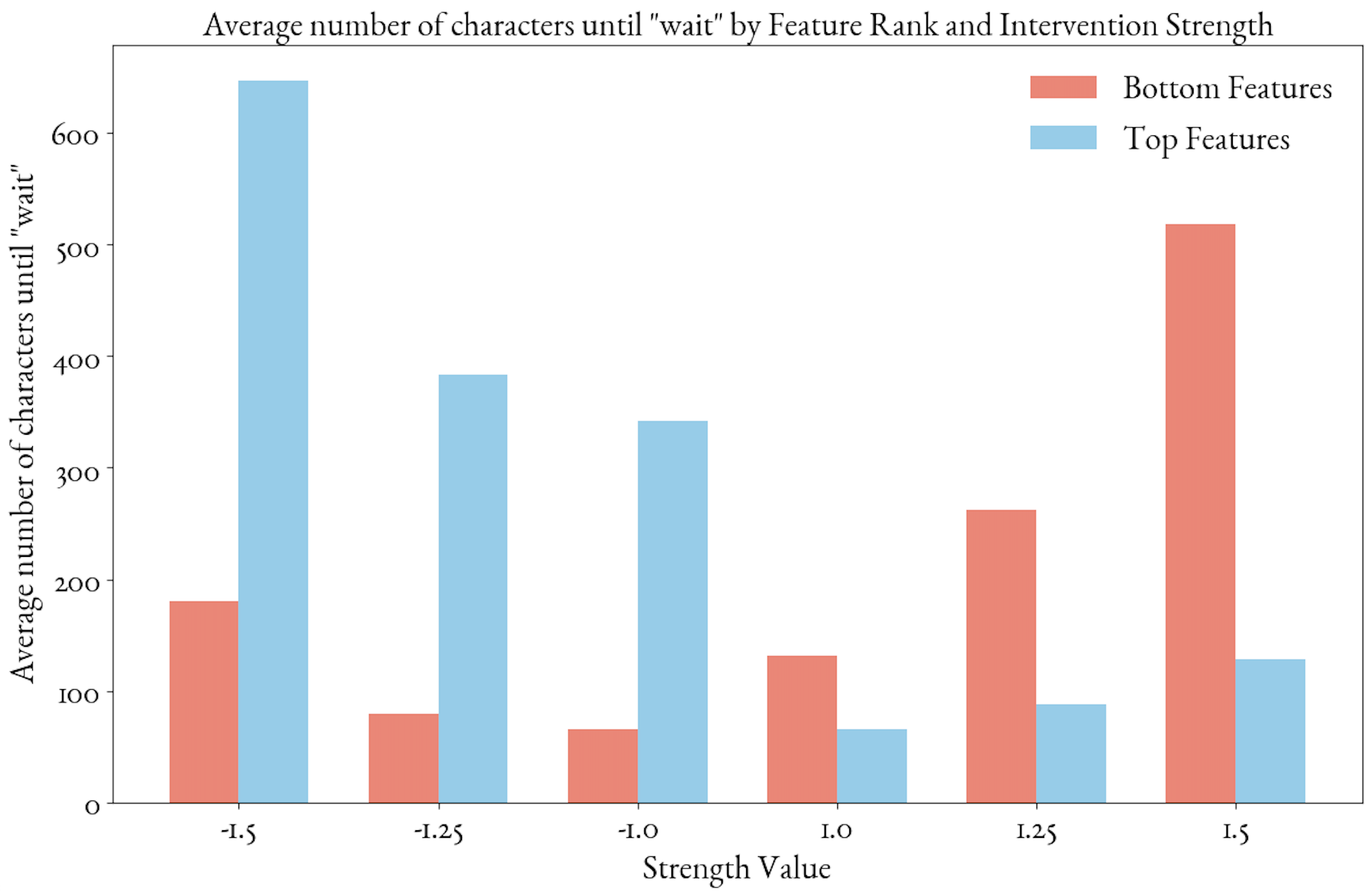

To verify that our features causally influence reasoning behavior, we steer the model by adding scaled feature activations during generation. We measure how many characters appear before the first 'wait' token. As expected, steering top features negatively (or bottom features positively) increases the distance to 'wait', confirming these features directly modulate when the model enters reasoning mode.
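The intervention and the metric can be sketched as follows, assuming steering adds a scaled, unit-normalized copy of a latent's reasoning-model decoder direction to the residual stream at every position; the authors' exact scaling scheme may differ. `steer`, `chars_before_wait`, and `mean_chars_before_wait` are hypothetical helpers, and the metric uses a simple case-insensitive substring match, so it counts 'Wait' as well as 'wait' (and would also match words such as 'await').

```python
import numpy as np

def steer(resid, direction, alpha):
    """Add alpha times the unit-normalized direction to every position.

    resid:     (seq_len, d_model) residual-stream activations at the steered layer
    direction: (d_model,) latent decoder direction
    alpha:     steering coefficient; negative values steer the feature down
    """
    unit = direction / np.linalg.norm(direction)
    return resid + alpha * unit

def chars_before_wait(text):
    """Characters preceding the first 'wait' (any case), or len(text) if absent."""
    idx = text.lower().find("wait")
    return len(text) if idx == -1 else idx

def mean_chars_before_wait(generations):
    """Average distance to the first 'wait' over a list of generated texts."""
    return sum(chars_before_wait(t) for t in generations) / len(generations)
```

Comparing `mean_chars_before_wait` across generations produced with alpha > 0, alpha = 0, and alpha < 0 mirrors the comparison described above.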

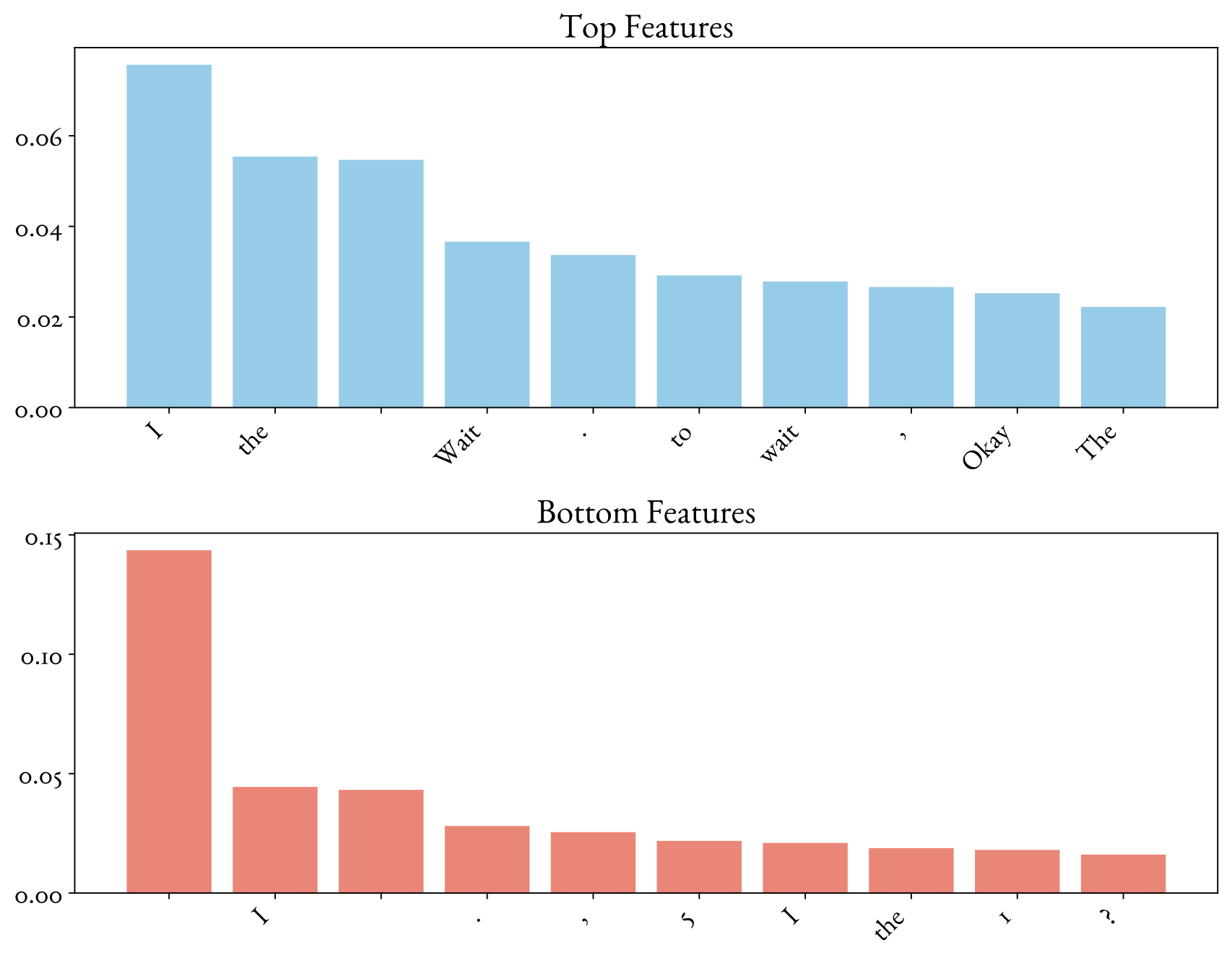

As an additional check, we use Patchscope, an intermediate decoding technique, to investigate the information contained in our selected features, computing the average next-token distribution for each of the top and bottom feature groups.
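The averaging step can be sketched independently of the patching itself. Assuming we already have, for each feature in a group, the next-token logits obtained by patching that feature into a Patchscope target prompt, the group-level distribution is the mean of the per-feature softmax distributions. The helper names and the toy vocabulary in any usage are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def average_next_token_distribution(logits_per_feature):
    """logits_per_feature: (n_features, vocab) logits read out after patching each feature."""
    return softmax(logits_per_feature).mean(axis=0)

def top_tokens(avg_probs, vocab, k=5):
    """The k most probable tokens under the averaged distribution."""
    order = np.argsort(avg_probs)[::-1][:k]
    return [(vocab[i], float(avg_probs[i])) for i in order]
```

Averaging probabilities rather than logits keeps each feature's contribution on the same scale, so a single feature with very large logits does not dominate the group summary.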

How to cite

The paper can be cited as follows.

bibliography

Dmitrii Troitskii, Koyena Pal, Chris Wendler, Callum Stuart McDougall, Neel Nanda. "Internal states before 'wait' modulate reasoning patterns." arXiv preprint arXiv:2510.04128, 2025.

bibtex

@inproceedings{troitskii-etal-2025-internal,
  title={Internal states before wait modulate reasoning patterns},
  author={Troitskii, Dmitrii and Pal, Koyena and Wendler, Chris and McDougall, Callum Stuart and Nanda, Neel},
  booktitle={Findings of the Association for Computational Linguistics: EMNLP 2025},
  year={2025}
}